NAS migration from TrueNAS to Ubuntu

I've been using FreeNAS/TrueNAS for over 8 years, and while it works great as a NAS, some of the idiosyncrasies made me decide to migrate to Ubuntu:

- TrueNAS is based on FreeBSD, and while it's similar to Linux, it's not 100% the same.

- The feature set of TrueNAS is overkill for my needs.

  - I'm a simple home user.

  - I'm the only one that logs in, so I don't need any authentication methods other than local.

  - I use TrueNAS for home backups and some simple directory sharing.

  - The only connections are from the local network (192.168.1.x/24).

- I've run into stability issues with the TrueNAS virtual machines (various OS in various VMs).

- TrueNAS is based on BSD, which I know little about.

  - While Linux and BSD are similar, I've run into more than a couple of differences that made my life difficult.

- No Docker support.

- Near total reliance on the web GUI. Web GUIs are nice, but I expect CLI commands that accomplish the same thing to be available.

- TrueNAS SCALE, based on Debian Linux, is currently in beta, but it's still more than I need as a home user.

  - The TrueNAS SCALE Docker implementation is changed enough to make it proprietary to TrueNAS.

  - Too many technological things in TrueNAS Core/SCALE are specific to that brand, which makes it harder to troubleshoot.

  - I don't need/want to learn TrueNAS, but rather the technology being used, such as KVM, Docker, Kubernetes, etc.

TrueNAS --> Ubuntu 20.04 migration

Pre-testing output in TrueNAS

- In TrueNAS, display information about all storage pools:

      zpool list
      NAME           SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
      VD01          14.5T  8.36T  6.14T        -         -    16%    57%  1.00x    ONLINE  /mnt
      freenas-boot   111G  5.65G   105G        -         -      -     5%  1.00x    ONLINE  -

- In TrueNAS, display storage pool properties:

      zpool get all VD01
      NAME  PROPERTY                       VALUE                  SOURCE
      VD01  size                           14.5T                  -
      VD01  capacity                       57%                    -
      VD01  altroot                        /mnt                   local
      VD01  health                         ONLINE                 -
      VD01  guid                           15889708516376535445   -
      VD01  version                        -                      default
      VD01  bootfs                         -                      default
      VD01  delegation                     on                     default
      VD01  autoreplace                    off                    default
      VD01  cachefile                      /data/zfs/zpool.cache  local
      VD01  failmode                       continue               local
      VD01  listsnapshots                  off                    default
      VD01  autoexpand                     on                     local
      VD01  dedupratio                     1.00x                  -
      VD01  free                           6.14T                  -
      VD01  allocated                      8.36T                  -
      VD01  readonly                       off                    -
      VD01  ashift                         0                      default
      VD01  comment                        -                      default
      VD01  expandsize                     -                      -
      VD01  freeing                        0                      -
      VD01  fragmentation                  16%                    -
      VD01  leaked                         0                      -
      VD01  multihost                      off                    default
      VD01  checkpoint                     -                      -
      VD01  load_guid                      6534596139609637702    -
      VD01  autotrim                       off                    default
      VD01  feature@async_destroy          enabled                local
      VD01  feature@empty_bpobj            active                 local
      VD01  feature@lz4_compress           active                 local
      VD01  feature@multi_vdev_crash_dump  enabled                local
      VD01  feature@spacemap_histogram     active                 local
      VD01  feature@enabled_txg            active                 local
      VD01  feature@hole_birth             active                 local
      VD01  feature@extensible_dataset     enabled                local
      VD01  feature@embedded_data          active                 local
      VD01  feature@bookmarks              enabled                local
      VD01  feature@filesystem_limits      enabled                local
      VD01  feature@large_blocks           enabled                local
      VD01  feature@large_dnode            disabled               local
      VD01  feature@sha512                 enabled                local
      VD01  feature@skein                  enabled                local
      VD01  feature@userobj_accounting     disabled               local
      VD01  feature@encryption             disabled               local
      VD01  feature@project_quota          disabled               local
      VD01  feature@device_removal         enabled                local
      VD01  feature@obsolete_counts        enabled                local
      VD01  feature@zpool_checkpoint       enabled                local
      VD01  feature@spacemap_v2            active                 local
      VD01  feature@allocation_classes     disabled               local
      VD01  feature@resilver_defer         disabled               local
      VD01  feature@bookmark_v2            disabled               local
      VD01  feature@redaction_bookmarks    disabled               local
      VD01  feature@redacted_datasets      disabled               local
      VD01  feature@bookmark_written       disabled               local
      VD01  feature@log_spacemap           disabled               local
      VD01  feature@livelist               disabled               local
      VD01  feature@device_rebuild         disabled               local
      VD01  feature@zstd_compress          disabled               local
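Since this output gets compared against Ubuntu later on, it is easier to save it to files than to copy it out of the terminal. A minimal sketch, assuming a hypothetical helper named save_pool_info and an output directory of your choosing (neither is part of TrueNAS):

```shell
#!/bin/sh
# Sketch: save pool information to files so it can be compared after the migration.
# save_pool_info is a hypothetical helper; pool name and output directory are arguments.
save_pool_info() {
    pool="$1"
    outdir="$2"
    mkdir -p "$outdir" || return 1
    # Overview of all storage pools
    zpool list > "$outdir/zpool-list.txt" || return 1
    # All properties and feature flags of the given pool
    zpool get all "$pool" > "$outdir/zpool-get-all-$pool.txt" || return 1
    echo "Saved pool information for $pool in $outdir"
}

# Example (run on TrueNAS before exporting the pool):
#   save_pool_info VD01 /root/pre-migration
```

Copy the resulting files somewhere outside the pool being migrated, so they are still reachable once the pool is exported.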

Exporting the ZFS storage pool(s) from within TrueNAS

- From a *nix perspective, you could use zpool export to export the ZFS storage pool. Assuming the storage pool is named tank:

      zpool export tank
      cannot unmount '/export/home/eschrock': Device busy
      zpool export -f tank

  Once the above command is executed, the pool tank is no longer visible within TrueNAS.

- However, this is TrueNAS, so the web GUI is preferred, and in some cases, the only way to get things to work properly (I've written about this previously).

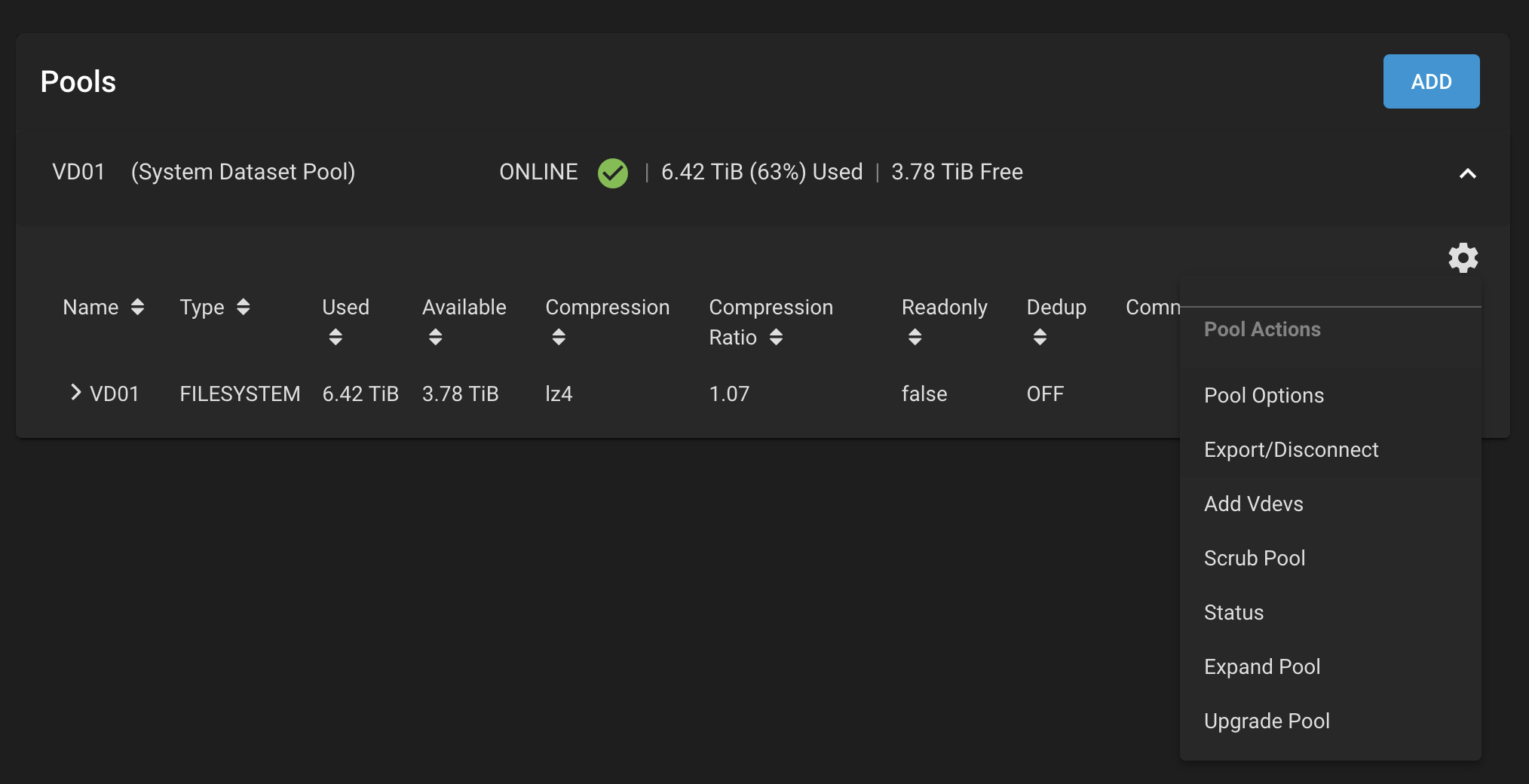

In the web GUI, go to Pools, select the pool to export, then click on the gear icon to the right, and select Export/Disconnect.

It's helpful to first shut down everything that is using anything within the ZFS storage pool, which in my case included Plex, a couple of VMs, and Docker instances.
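For the generic CLI route (not the TrueNAS GUI route), the export-then-force sequence can be wrapped so that forcing is an explicit choice rather than a reflex. This is only a sketch; export_pool is a hypothetical helper name, and it uses nothing beyond zpool export and its -f switch:

```shell
#!/bin/sh
# Sketch: export a pool from the CLI, forcing only when explicitly asked to.
# export_pool is a hypothetical helper name.
export_pool() {
    pool="$1"
    force="${2:-no}"
    # First try a normal export
    if zpool export "$pool"; then
        echo "exported $pool"
        return 0
    fi
    if [ "$force" = "force" ]; then
        # -f forcibly unmounts busy file systems; stop services first if possible
        zpool export -f "$pool" && echo "force-exported $pool"
        return $?
    fi
    echo "export of $pool failed; stop whatever is using it, or retry with 'force'" >&2
    return 1
}

# Example:
#   export_pool tank          # normal export
#   export_pool tank force    # fall back to -f if the normal export fails
```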

Verifying the ZFS storage pool(s) in Ubuntu

- Installed Ubuntu Server 20.04 on a USB stick for testing.

- Install ZFS utilities:

      zfs list

      Command 'zfs' not found, but can be installed with:

      sudo apt install zfsutils-linux  # version 0.8.3-1ubuntu12.12, or
      sudo apt install zfs-fuse        # version 0.7.0-20

  Because I wanted the newest version available in Ubuntu, to match the feature flags supported by TrueNAS, I installed zfsutils-linux:

      sudo apt install zfsutils-linux -y

- As root, display ZFS storage pool information:

      zpool import
         pool: VD01
           id: 15889708516376535445
        state: ONLINE
       status: Some supported features are not enabled on the pool.
       action: The pool can be imported using its name or numeric identifier, though
              some features will not be available without an explicit 'zpool upgrade'.
       config:

              VD01        ONLINE
                raidz1-0  ONLINE
                  sde2    ONLINE
                  sdf2    ONLINE
                  sdd2    ONLINE
                  sdc2    ONLINE

  I wanted the ZFS storage pool mounted within /mnt, so I used the -R switch:

      zpool import -R /mnt VD01

  Check on the status of the newly imported ZFS storage pool:

      zpool list
      NAME   SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
      VD01  14.5T  8.36T  6.14T        -         -    16%    57%  1.00x    ONLINE  /mnt

      df -h /mnt/VD01
      Filesystem      Size  Used Avail Use% Mounted on
      VD01            5.9T  2.1T  3.8T  36% /mnt/VD01

  Check on the flags supported/enabled on Ubuntu:

      zpool get all VD01
      NAME  PROPERTY                       VALUE                  SOURCE
      VD01  size                           14.5T                  -
      VD01  capacity                       57%                    -
      VD01  altroot                        /mnt                   local
      VD01  health                         ONLINE                 -
      VD01  guid                           15889708516376535445   -
      VD01  version                        -                      default
      VD01  bootfs                         -                      default
      VD01  delegation                     on                     default
      VD01  autoreplace                    off                    default
      VD01  cachefile                      none                   local
      VD01  failmode                       continue               local
      VD01  listsnapshots                  off                    default
      VD01  autoexpand                     on                     local
      VD01  dedupditto                     0                      default
      VD01  dedupratio                     1.00x                  -
      VD01  free                           6.14T                  -
      VD01  allocated                      8.36T                  -
      VD01  readonly                       off                    -
      VD01  ashift                         0                      default
      VD01  comment                        -                      default
      VD01  expandsize                     -                      -
      VD01  freeing                        0                      -
      VD01  fragmentation                  16%                    -
      VD01  leaked                         0                      -
      VD01  multihost                      off                    default
      VD01  checkpoint                     -                      -
      VD01  load_guid                      534499447540406522     -
      VD01  autotrim                       off                    default
      VD01  feature@async_destroy          enabled                local
      VD01  feature@empty_bpobj            active                 local
      VD01  feature@lz4_compress           active                 local
      VD01  feature@multi_vdev_crash_dump  enabled                local
      VD01  feature@spacemap_histogram     active                 local
      VD01  feature@enabled_txg            active                 local
      VD01  feature@hole_birth             active                 local
      VD01  feature@extensible_dataset     enabled                local
      VD01  feature@embedded_data          active                 local
      VD01  feature@bookmarks              enabled                local
      VD01  feature@filesystem_limits      enabled                local
      VD01  feature@large_blocks           enabled                local
      VD01  feature@large_dnode            disabled               local
      VD01  feature@sha512                 enabled                local
      VD01  feature@skein                  enabled                local
      VD01  feature@edonr                  disabled               local
      VD01  feature@userobj_accounting     disabled               local
      VD01  feature@encryption             disabled               local
      VD01  feature@project_quota          disabled               local
      VD01  feature@device_removal         enabled                local
      VD01  feature@obsolete_counts        enabled                local
      VD01  feature@zpool_checkpoint       enabled                local
      VD01  feature@spacemap_v2            active                 local
      VD01  feature@allocation_classes     disabled               local
      VD01  feature@resilver_defer         disabled               local
      VD01  feature@bookmark_v2            disabled               local
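The import and the health check can also be combined into one step that fails loudly if the pool does not come up ONLINE. A minimal sketch, assuming a hypothetical helper named import_and_check; the -H and -o switches of zpool list print a single column without a header, which makes the value easy to test in a script:

```shell
#!/bin/sh
# Sketch: import a pool under an alternate root and confirm that it is healthy.
# import_and_check is a hypothetical helper name.
import_and_check() {
    pool="$1"
    altroot="$2"
    # -R sets the altroot, so the pool's datasets are mounted below it
    zpool import -R "$altroot" "$pool" || return 1
    # -H drops the header line, -o health prints only the HEALTH column
    health=$(zpool list -H -o health "$pool") || return 1
    if [ "$health" = "ONLINE" ]; then
        echo "$pool is ONLINE under $altroot"
    else
        echo "$pool reports health: $health" >&2
        return 1
    fi
}

# Example (as root):
#   import_and_check VD01 /mnt
```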

Features/flags comparison results

I simply used sdiff on the output of zpool get all VD01 from both TrueNAS and Ubuntu.

Ubuntu has a single feature/flag that TrueNAS doesn't, but it's disabled:

feature@edonr disabled

TrueNAS has a number of features/flags that Ubuntu doesn't, but they're also all disabled:

feature@redaction_bookmarks disabled

feature@redacted_datasets disabled

feature@bookmark_written disabled

feature@log_spacemap disabled

feature@livelist disabled

feature@device_rebuild disabled

feature@zstd_compress disabled
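sdiff shows every difference side by side, including properties such as load_guid that are expected to differ. If only the feature flags matter, a small filter can list the flags present in one saved output but missing from the other. This is a sketch under the assumption that both zpool get all outputs were saved to text files; features_only_in and the file names are hypothetical:

```shell
#!/bin/sh
# Sketch: print feature flags (name and state) that appear in the first saved
# 'zpool get all' output but not in the second one.
# features_only_in is a hypothetical helper name.
features_only_in() {
    first="$1"
    second="$2"
    awk -v other="$second" '
        BEGIN {
            # Remember every feature@ property listed in the second file
            while ((getline line < other) > 0) {
                split(line, f, " ")
                if (f[2] ~ /^feature@/) seen[f[2]] = 1
            }
        }
        # Print features from the first file that the second file lacks
        $2 ~ /^feature@/ && !($2 in seen) { print $2, $3 }
    ' "$first"
}

# Example, with placeholder file names:
#   features_only_in ubuntu.txt truenas.txt
#   features_only_in truenas.txt ubuntu.txt
```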

One very important item I noticed after running sdiff is that Ubuntu, at least on the USB-stick install I tested with, didn't have a cachefile configured. This is most likely a side effect of importing with the -R switch, which sets the cachefile property to none.

VD01 cachefile /data/zfs/zpool.cache | VD01 cachefile none

To create it in Ubuntu:

zpool set cachefile=/etc/zfs/zpool.cache VD01
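To avoid overwriting a cachefile setting that is already in place, the property can be read first and changed only when it is none. A minimal sketch, assuming a hypothetical helper named fix_cachefile; the default path is the one used above:

```shell
#!/bin/sh
# Sketch: set the cachefile property only when it is currently 'none'.
# fix_cachefile is a hypothetical helper name.
fix_cachefile() {
    pool="$1"
    path="${2:-/etc/zfs/zpool.cache}"
    # -H drops the header line, -o value prints only the property value
    current=$(zpool get -H -o value cachefile "$pool") || return 1
    if [ "$current" != "none" ]; then
        echo "cachefile for $pool is '$current', leaving it alone"
        return 0
    fi
    zpool set cachefile="$path" "$pool" || return 1
    echo "cachefile for $pool set to $path"
}

# Example (as root):
#   fix_cachefile VD01
```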

References

Oracle Solaris ZFS Administration Guide - Chapter 4 Managing Oracle Solaris ZFS Storage Pools https://docs.oracle.com/cd/E19253-01/819-5461/gaynp/index.html

OpenZFS - FAQ https://openzfs.github.io/openzfs-docs/Project%20and%20Community/FAQ.html#the-etc-zfs-zpool-cache-file